The proGenomes2 project is a set of over 85,000 consistently annotated bacterial and archaeal genomes from over 12,000 species which provides a set of reference genomes across taxonomies and specific habitats, such as disease and food-related pathogens, and microbes from aquatic and soil environments. These databases offer excellent starting points for taxonomic profiling as they are unbiased and aim to span the diversity of the specific habitats. Unfortunately, the databases are not in a format that may be used directly within QIAGEN CLC Genomics Workbench, but with scripting, you can produce similar databases from within QIAGEN CLC using the proGenomes2 fasta files as a starting point. The headers of the proGenomes2 databases are constructed in the following way:

<taxid>.<biosample>.<nucleotide_id>

We use the biosample ID to find a set of assemblies in NCBI which we can download with the ‘Download Microbial Reference Database’ tool, including all information required for taxonomic profiling. First we need to find the desired database from http://progenomes.embl.de/data/, e.g. the sediment_mud specific database (but any other progenomes2 database hosted at this URL will work, replacing the definition of "URL" in the script below). With the following simple script we can stream the headers of that (gzipped) fasta file into the unique biosample IDs and use NCBI’s Eutils API to translate them into a set of unique assembly IDs and finally collect them into a file:

import sys, time, gzip, urllib.request import xml.etree.ElementTree as ET url="http://progenomes.embl.de/data/habitats/representatives.sediment_mud.contigs.fasta.gz" print("Downloading "+url[url.rfind("/")+1:]+" may take some time ... ", end="", flush=True) with gzip.GzipFile(fileobj=urllib.request.urlopen(url)) as f: l = list({ line.decode("UTF-8").split(".")[1] for iline, line in enumerate(f) if line.startswith(b">")}) print("Done") def request(query): i = 0 while True: try: return ET.fromstring(urllib.request.urlopen(query).read().decode("utf-8")) except Exception as e: if i > 5: print("Could not reach: "+query+"\nCheck connection: "+str(e)) exit(1) time.sleep(1) i+=1 assemblies = set() interval=50 for ibiosample in range(0,len(l),interval): biosample = "+OR+".join(bs for bs in l[ibiosample:min(ibiosample+interval,len(l))]) base="https://eutils.ncbi.nlm.nih.gov/entrez/eutils/" rparse = request(base + "esearch.fcgi?db=assembly&term="+biosample+"[biosample]&usehistory=y") query2 = base+"esummary.fcgi?db=assembly&query_key="+rparse.find("QueryKey").text+"&WebEnv="+rparse.find("WebEnv").text for res in request(query2).findall(".//AssemblyAccession"): assemblies.add(res.text[:res.text.find(".")]) print("Getting Assembly IDs from NCBI: {:.2f}%".format(min(ibiosample+interval, len(l))*100/len(l)),end="\r" if ibiosample+interval<len(l) else "\n") ofname = url[url.rfind("/")+1:].replace(".fasta.gz",".txt") print("Writing Assembly IDs to output file "+ofname) with open(ofname , 'w') as f: for assembly in sorted(assemblies): f.write(assembly+"\n")

To run this script, you need a standard installation of python3. All you need to do is copy and paste the content above, modify the URL (if necessary), save it to a file and execute it on your system. For example, you may save the file as "get_assembly_ids.py", then open a terminal and navigate to the folder where the script is located. Finally, execute it with:

| $python get_assembly_ids.py |

Running this script takes about 2 minutes (for the sediment_mud database), depending on your internet connection. The output will be a file called "representatives.sediment_mud.contigs.txt" placed in the same folder as the script containing the assembly IDs from which the respective progenomes2 database has been created (if you changed the URL, the name of the output file would be changed accordingly).

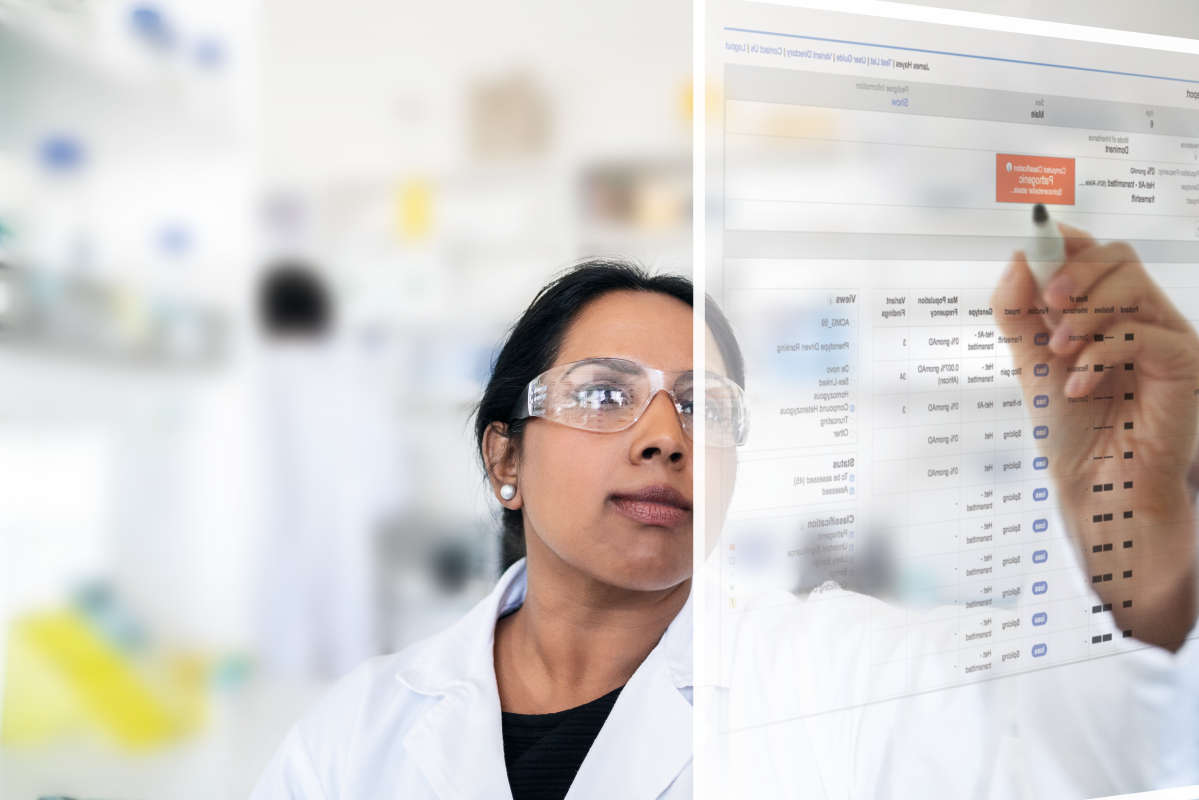

This file can now be used from within QIAGEN CLC Genomics Workbench (with the Microbial Genomics Module installed). Select Toolbox → Microbial Genomics Module → Databases → Taxonomic analysis → Download Microbial Reference Database and select "Create custom database" and "Include all" sequences.

After clicking next, it is possible to supply a file with Assembly Accession IDs. Select the file "representatives.sediment_mud.contigs.txt" we have just created and click "Finish".

This will create a "Database builder" where the assemblies from "representatives.sediment_mud.contigs.txt" have been selected and staged for download. The Database builder gives an overview of the selected references and provides an estimate of the download size.

By clicking "Download Selection" the download process is started and a sequence list is saved to the selected location. From this sequence list, a Taxonomic Profiling index can be constructed by running the Create Taxonomic Profiling Index tool.

Learn more about how QIAGEN CLC Genomics and QIAGEN CLC Genomics Workbench with the Microbial Genomics Module are powerful and scalable solutions to support all your genomics analysis needs.