Are you a researcher or data scientist working in drug discovery? If so, you depend on data to help you achieve unique insights by revealing patterns across experiments. Yet, not all data are created equal. The quality of data you use to inform your research is essential. For example, if you acquire data using natural language processing (NLP) or text mining, you may have a broad pool of data, but at the high cost of a relatively large number of errors (1).

As a drug development researcher, you’re also familiar with freely available datasets from public ‘omics data repositories, and you rely on them to gain insights for your preclinical programs. These open-source datasets, aggregated in portals such as The Cancer Genome Atlas (TCGA) and Gene Expression Omnibus (GEO), contain data from thousands of samples used to validate or redirect the discovery of gene signatures, biomarkers and therapies. In theory, access to so much experimental data should be an asset. But because the data are unintegrated and inconsistent, they are not directly usable. In practice, you can spend hours sifting through these portals for the information needed to clean up the data before you can use them, which is costly, time-consuming and inefficient.

Data you can use right away

Imagine how transformative it would be if you had direct access to ‘usable data’ that you could immediately understand and work with, without searching for additional information or having to clean and structure it. Data that are comprehensive yet accurate, reliable and analysis-ready. Data you can begin converting into knowledge right away to drive your biomedical discoveries.

Creating usable data

Data curation has become an essential requirement in producing usable data. Data scientists spend an estimated 80% of their time collecting, cleaning and processing data, leaving less than 20% of their time for analyzing the data to generate insights (2,3). But data curation is not just time-consuming. It’s costly and challenging to scale as well, particularly if legacy datasets must be revised to match updated curation standards.

What if there were a team of experts to take on the manual curation of the data you need so researchers like you could focus on making discoveries?

Our experts have been curating biomedical and clinical data for over 25 years. We’ve made massive investments in a biomedical and clinical knowledge base that contains millions of manually reviewed findings from the literature, plus information from commonly used third-party databases and ‘omics dataset repositories. Our human-certified data enables you to generate insights rather than collect and clean data. With our knowledge and databases, scientists like you can generate high-quality, novel hypotheses quickly and efficiently while using innovative and advanced approaches, including artificial intelligence.

Figure 1. Our workflow for processing 'omics data.

4 advantages of manually curated data

Our 200 dedicated curation experts follow these seven best practices for manual curation. Why do we apply so much manual effort to data curation? Based on our principles and practices for manual curation, here are the top reasons manually curated data is fundamental to your research success:

1. Metadata fields are unified, not redundant

Author-submitted metadata vary widely. Manual curation of field names can enforce alignment to a set of well-defined standards. Our curators identify hundreds of columns containing frequently used information across studies and combine these data into unified columns to enhance cross-study analyses. This unification is evident in our TCGA metadata dictionary, for example, where we unified into a single field the five different fields that were used to indicate TCGA samples with a cancer diagnosis of a first-degree family member.
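
To make the idea of field unification concrete, here is a minimal Python sketch that collapses several redundant metadata fields into a single value. It is an illustration only and not our curation tooling: the five column names, the accepted yes/no spellings and the `unify_family_history` helper are all hypothetical, since the actual TCGA field names are not listed here.

```python
# Hypothetical source column names; the real TCGA field names are not given in the text.
FAMILY_HISTORY_FIELDS = [
    "family_history_cancer",
    "relative_cancer_diagnosis",
    "first_degree_relative_cancer",
    "fam_hx_cancer",
    "family_member_cancer_indicator",
]

# Assumed spellings that authors might use for yes/no answers.
TRUE_VALUES = {"yes", "y", "true", "1"}
FALSE_VALUES = {"no", "n", "false", "0"}


def unify_family_history(record):
    """Collapse redundant fields into one value.

    Returns "yes", "no", "conflict" (fields disagree) or None (not reported).
    """
    seen = set()
    for field in FAMILY_HISTORY_FIELDS:
        raw = record.get(field)
        if raw is None:
            continue
        value = str(raw).strip().lower()
        if value in TRUE_VALUES:
            seen.add("yes")
        elif value in FALSE_VALUES:
            seen.add("no")
        # Empty or unrecognized values are ignored in this sketch.
    if not seen:
        return None
    if len(seen) > 1:
        return "conflict"  # flag for a human curator rather than guess
    return seen.pop()


# Invented example records.
samples = [
    {"sample_id": "S1", "fam_hx_cancer": "Y"},
    {"sample_id": "S2", "family_history_cancer": "No", "relative_cancer_diagnosis": ""},
    {"sample_id": "S3", "first_degree_relative_cancer": "yes", "fam_hx_cancer": "no"},
    {"sample_id": "S4"},
]
unified = {s["sample_id"]: unify_family_history(s) for s in samples}
print(unified)
```

Note that the sketch flags disagreeing fields as a conflict instead of picking a winner. Resolving such cases is exactly the kind of decision that needs a human curator.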

2. Data labels are clear and consistent

Unfortunately, published datasets commonly use vague abbreviations as labels for patient groups, tissue types, drugs or other main elements. If you want to develop successful hypotheses from these data, it’s critical that you understand the intended meaning of each label and the relationships among them. Our curators take the time to investigate each study and apply labels precisely and accurately so that you can group and compare the data in the study with other relevant studies.
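
As a rough illustration of what consistent labeling involves, the Python sketch below maps author-supplied abbreviations to standard terms from a small controlled vocabulary and flags anything it cannot resolve. The vocabulary entries and the `normalize_label` helper are hypothetical examples, not our actual vocabularies or software.

```python
# Hypothetical controlled vocabulary: author abbreviation -> standard term.
TISSUE_VOCAB = {
    "pbmc": "peripheral blood mononuclear cell",
    "bm": "bone marrow",
    "ln": "lymph node",
}


def normalize_label(raw_label, vocabulary):
    """Return (label, needs_review).

    Known abbreviations and already-standard terms are mapped to the
    standard term; anything else is returned unchanged and flagged.
    """
    key = raw_label.strip().lower()
    if key in vocabulary:
        return vocabulary[key], False
    if key in vocabulary.values():
        return key, False
    return raw_label, True  # ambiguous: a curator must check the study


# Invented author labels.
labels = ["PBMC", " bm ", "Bone Marrow", "T2"]
normalized = [normalize_label(label, TISSUE_VOCAB) for label in labels]
for original, (term, needs_review) in zip(labels, normalized):
    print(repr(original), "->", term, "(needs review)" if needs_review else "")
```

A lookup like this only handles labels that are already known. A label such as "T2" could mean a time point, a tumor stage or a treatment arm, and only reading the study can settle which.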

3. Additional contextual information and analysis

Properly labeled data enables scientifically meaningful comparisons between sample groups to reveal biomarkers. Our scientists are committed to expert manual curation and scientific review, which includes generating statistical models to reveal differential expression patterns. In addition to calculating differential expression between sample groups defined by the authors, our scientists perform custom statistical comparisons to support additional insights from the data.
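
For readers who want a feel for what a comparison between two sample groups computes, here is a deliberately simple Python sketch for a single gene: a log2 fold change plus Welch's t statistic. It is not the statistical model our scientists use. The expression values are invented, the pseudocount of 1 is an assumption, and real analyses rely on dedicated statistical packages and multiple-testing correction across all genes.

```python
import math
from statistics import mean, variance


def log2_fold_change(group_a, group_b, pseudocount=1.0):
    """log2 ratio of mean expression in group_a versus group_b.

    The pseudocount (an assumption of this sketch) avoids division by zero.
    """
    return math.log2((mean(group_a) + pseudocount) / (mean(group_b) + pseudocount))


def welch_t(group_a, group_b):
    """Welch's t statistic for two groups with possibly unequal variances."""
    standard_error = math.sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_a) - mean(group_b)) / standard_error


# Invented expression values for one gene in two correctly labeled groups.
tumor = [30.0, 31.0, 32.0]
normal = [6.0, 7.0, 8.0]

lfc = log2_fold_change(tumor, normal)
t_stat = welch_t(tumor, normal)
print(f"log2 fold change: {lfc:.2f}, Welch t: {t_stat:.2f}")
```

The result is only meaningful if every sample sits in the right group, which is why labeling comes first.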

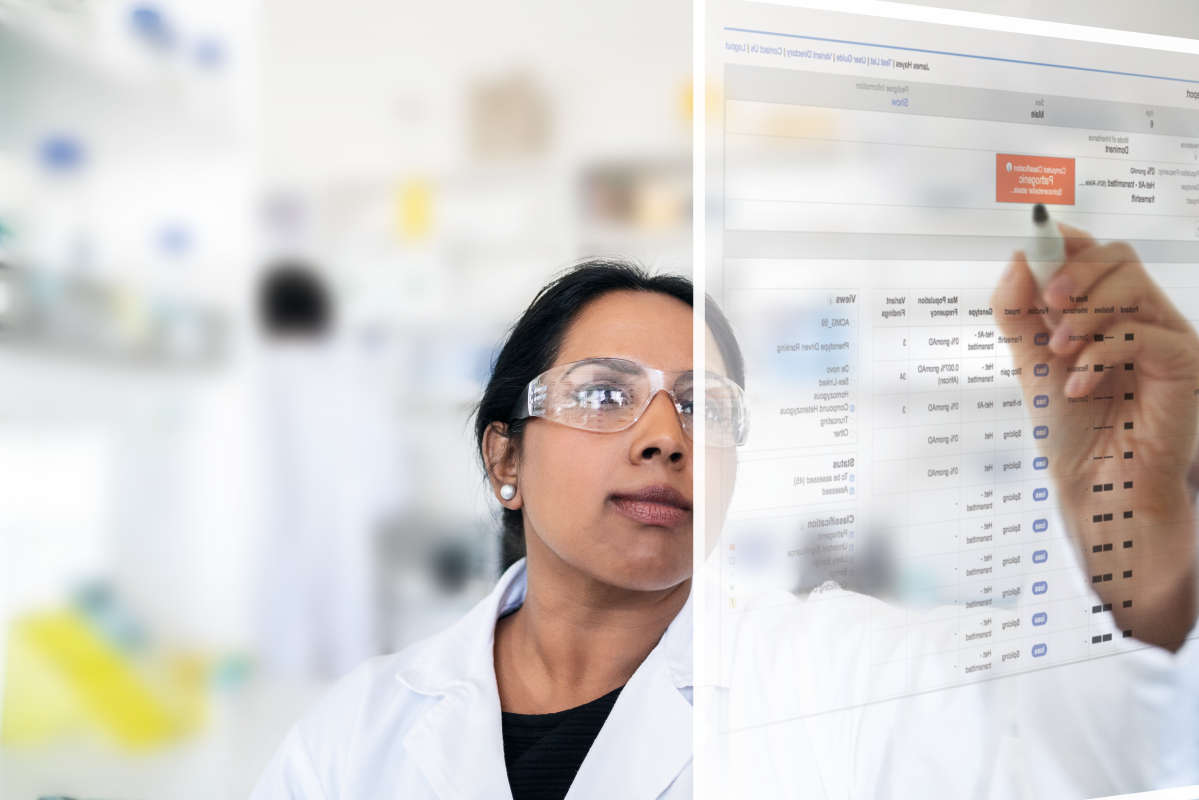

4. Author errors are detected

No matter how consistent data labels are, NLP processes cannot identify misassigned sample groups, and such errors are devastating to data analysis. Unfortunately, data are sometimes rendered uninterpretable by conflicts between the sample labels presented in a publication and those in the corresponding entry in a public ‘omics data repository. As shown in Figure 2, for a given Patient ID, both ‘Age’ and ‘Genetic Subtype’ are mismatched between the study’s GEO entry and the publication table. Which sample labels are correct? Our curators identify these issues and work with authors to correct errors before including the data in our databases.

Figure 2. In this submission to NCBI GEO, the patient ages in the GEO entry conflict with those in the associated publication. What’s more, the genetic subtype labels are mixed up. Without resolving these errors, the data cannot be used. This level of attention to detail is required and can only be achieved with manual curation.
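
The kind of cross-check described above can be sketched in a few lines of Python. The example compares per-patient metadata from a repository entry against the publication table and lists every mismatch. The records are invented (they are not the values shown in Figure 2), and the `find_conflicts` helper and field names are hypothetical. Code like this can surface a conflict, but deciding which source is right still requires a curator and, often, the authors.

```python
def find_conflicts(geo_records, publication_records, fields):
    """List (patient_id, field, geo_value, publication_value) for each mismatch.

    Only patients present in both sources are compared.
    """
    conflicts = []
    shared_ids = sorted(geo_records.keys() & publication_records.keys())
    for patient_id in shared_ids:
        for field in fields:
            geo_value = geo_records[patient_id].get(field)
            pub_value = publication_records[patient_id].get(field)
            if geo_value != pub_value:
                conflicts.append((patient_id, field, geo_value, pub_value))
    return conflicts


# Invented records keyed by patient ID.
geo = {
    "P01": {"age": 54, "genetic_subtype": "A"},
    "P02": {"age": 61, "genetic_subtype": "B"},
    "P03": {"age": 47, "genetic_subtype": "A"},
}
publication = {
    "P01": {"age": 45, "genetic_subtype": "B"},
    "P02": {"age": 61, "genetic_subtype": "B"},
}

conflicts = find_conflicts(geo, publication, ["age", "genetic_subtype"])
for patient_id, field, geo_value, pub_value in conflicts:
    print(f"{patient_id}: {field} is {geo_value!r} in GEO but {pub_value!r} in the publication")
```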

At the core of our curation process, curators apply scientific expertise, controlled vocabularies and standardized formatting to all applicable metadata. The result is that you can quickly and easily find all applicable samples across data sources using simplified search criteria.

Dig deeper into the value of QIAGEN Digital Insights’ manual curation process

Ready to incorporate into your research the reliable biomedical, clinical and ‘omics data we’ve developed using manual curation best practices? Explore our QIAGEN knowledge and databases, and request a consultation to find out how our manually curated data will save you time and enable you to develop quicker, more reliable hypotheses. Learn more about the costs of free data in our industry report and download our unique and comprehensive metadata dictionary of clinical covariates to experience first-hand just how valuable manual curation really is.

References: