With the scientific research community publishing over two million peer-reviewed articles every year since 2012 (1) and next-generation sequencing fueling a data explosion, the need for comprehensive yet accurate, reliable and analysis-ready information on the path to biomedical discoveries is now more pressing than ever.

Manual curation has become an essential requirement in producing such data. Data scientists spend an estimated 80% of their time collecting, cleaning and processing data, leaving less than 20% of their time for analyzing the data to generate insights (2,3). But manual curation is not just time-consuming. It is costly and challenging to scale as well.

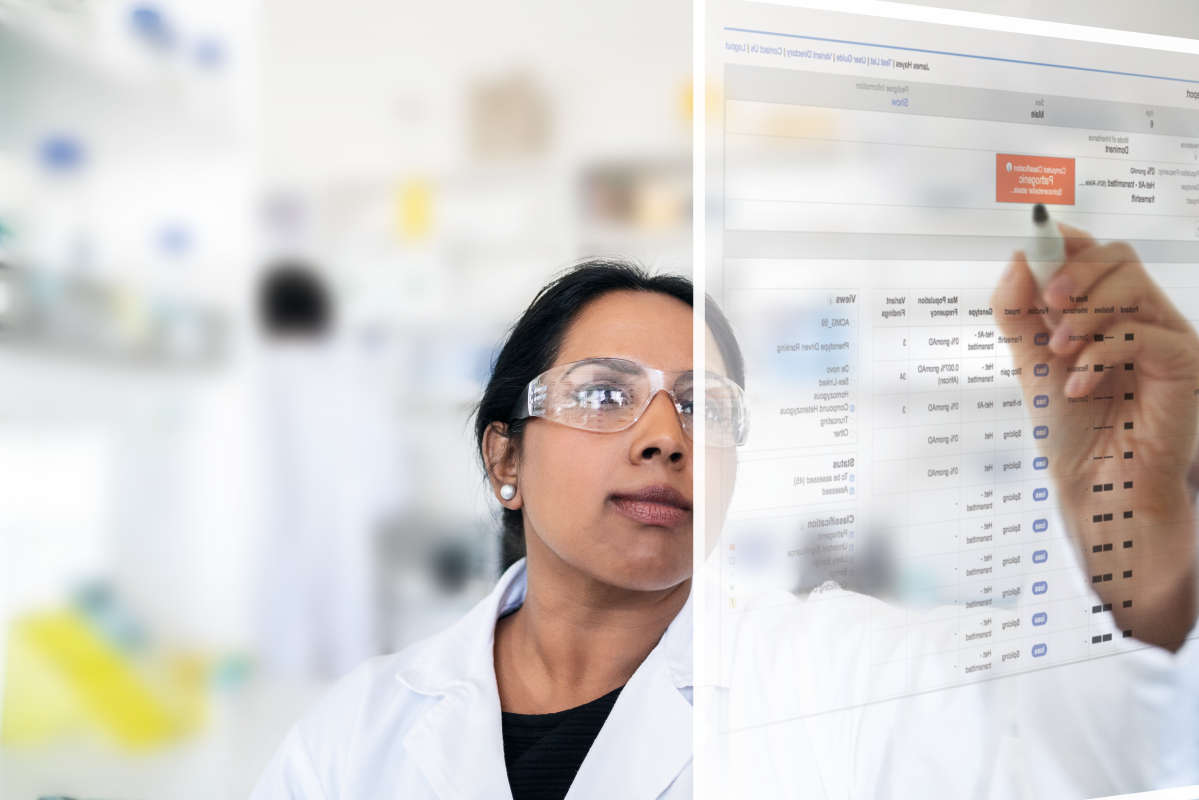

We at QIAGEN take on the task of manual curation so researchers like you can focus on making discoveries. Our human-certified data enables you to concentrate on generating insights rather than collecting data. QIAGEN has been curating biomedical and clinical data for over 25 years. We've made massive investments in a biomedical and clinical knowledge base that contains millions of manually reviewed findings from the literature, plus information from commonly used third-party databases and 'omics dataset repositories. With our knowledge and databases, scientists can generate high-quality, novel hypotheses quickly and efficiently, while using innovative and advanced approaches, including artificial intelligence.

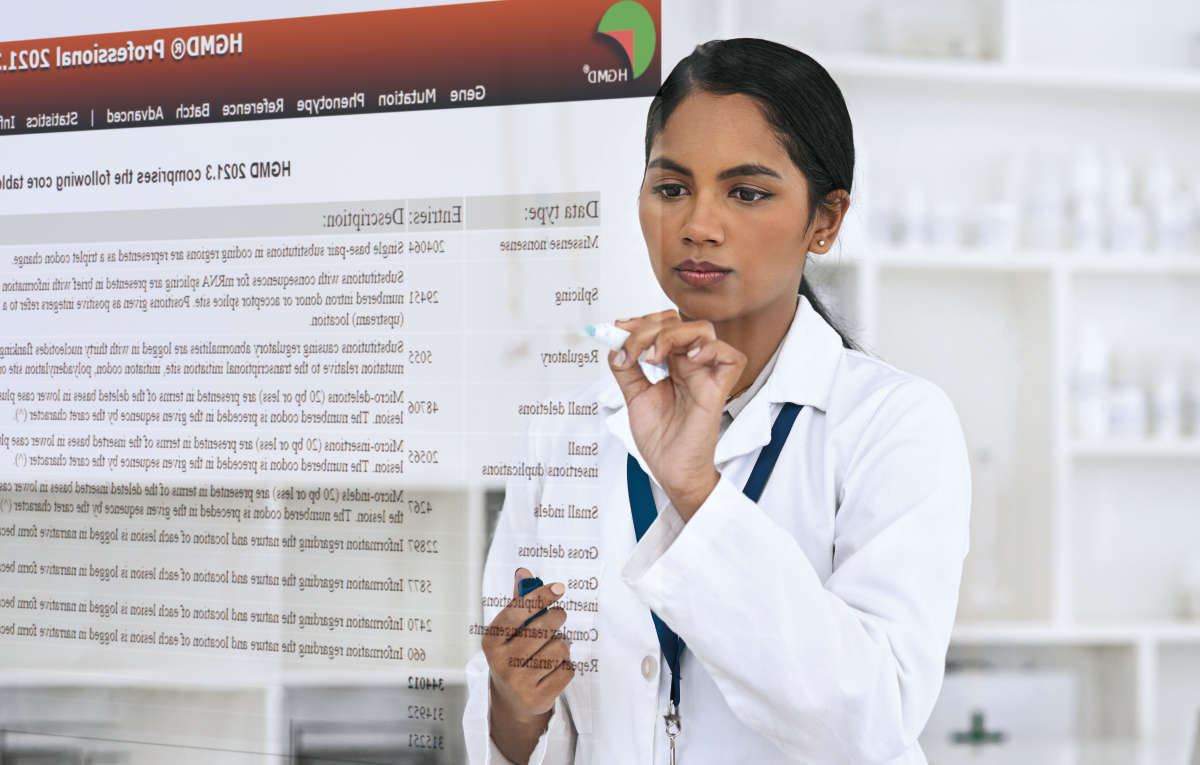

Here are seven best practices for manual curation that QIAGEN's 200 dedicated curation experts follow, which we presented at the November 2021 Pistoia Alliance event.

These principles ensure that our knowledge base and integrated 'omics database deliver timely, highly accurate, reliable and analysis-ready data. In our experience, 40% of public 'omics datasets include typos or other potentially critical errors in an essential element (cell lines, treatments, etc.); 5% require us to contact the authors to resolve inconsistent terms, mislabeled treatments or infections, inaccurate sample groups or errors mapping subjects to samples. Thanks to our stringent manual curation processes, we can correct such errors.

Our extensive investment in high-quality manual curation means that scientists like you don't need to spend 80% of your time aggregating and cleaning data. We've scaled our rigorous manual curation procedures to collect and structure accurate and reliable information from many different sources, from journal articles to drug labels to 'omics datasets. In short, we accelerate your journey to comprehensive yet accurate, reliable and analysis-ready data.

Ready to get your hands on reliable biomedical, clinical and 'omics data that we've manually curated using these best practices? Learn about QIAGEN knowledge and databases, and request a consultation to find out how our accurate and reliable data will save you time and get you quick answers to your questions.

References: